Volume 2, Year 2014 - Pages 28-36

DOI: 10.11159/vwhci.2014.004

"Like a Family Member Who Takes Care of Me"

Users' Anthropomorphic Representations and Trustworthiness of Smart Home Environments

Oliver Sack¹*, Carsten Röcker²

¹Department of Psychology, RWTH Aachen University, Jaegerstraße 17-19,

Aachen, 52066, Germany

oliver.sack@psych.rwth-aachen.de

²Human-Computer Interaction Center (HCIC), Theaterplatz 14, Aachen, 52056, Germany

Abstract - In recent years, the concept of trust has attracted increased attention in the field of smart home environments. However, little is known about what determines trustworthiness in this context. The objective of this work was therefore to examine mental models in terms of the anthropomorphic perception of smart home environments and its relation to trustworthiness. Two studies (N=36) were carried out in the Future Care Lab, a simulated intelligent home environment. We used the teach-back method to help participants talk about the smart home environment technology and asked them to generate a metaphor for an experienced home-monitoring scenario. Finally, we applied a linguistic analysis of the responses to detect anthropomorphic characteristics. In general, the results reveal inspiring metaphors related to the personal assistance system, e.g. "like an airbag…" or "like a family member…", which might be useful for future interface designs and communication approaches in the context of smart home environments. However, no relation between anthropomorphism and trustworthiness could be found. Therefore, we suggest an anthropomorphic threshold, which should be investigated using an improved method and trust scale.

Keywords: Smart environment, e-health, user study, mental model, anthropomorphism, metaphor, technology acceptance, trust, evaluation.

© Copyright 2014 Authors - This is an Open Access article published under the Creative Commons Attribution License terms. Unrestricted use, distribution, and reproduction in any medium are permitted, provided the original work is properly cited.

Date Received: 2013-10-09

Date Accepted: 2014-02-19

Date Published: 2014-03-15

1. Introduction

Smart environments in a medical context provide a wide range of services that support people in their everyday lives [1], assist in sustaining the autonomy and quality of life of older adults [2] and furthermore help, in the long run, to limit the cost of medical care. At the same time, the highly technological features of smart environments, such as sensors, do not necessarily impose themselves on the user, as they are in many cases hardly noticeable. As a matter of fact, smart environments collect a vast amount of information, which "has led to growing concerns about the privacy, security and trustworthiness of such systems and the data they hold" ([3], p.1). Hence, attention should be shifted to these determinants in order to ensure a positive usage behavior [4, 5, 6, 7].

1.1. Trust as a Critical Factor

Several research frameworks, for example the Ubiquitous Computing Acceptance Model [8] and the Technology Acceptance Model for Mobile Services [9], underline the critical role of trust in smart environments. The Ubiquitous Computing Acceptance Model (UCAM), in which the concept of trust plays a central role, explains (a) how privacy, security and trust are linked to one another; and (b) how trust is related to usage intention. Shin [8] considers "trust […] as an antecedent variable to attitudes" (p.173). Attitudes, in turn, are positively linked with behavioral intention. Additionally, the critical role of trust for usage intention was already mentioned by Kaasinen [9], who developed the Technology Acceptance Model for Mobile Services (TAMM) as a modification of the Technology Acceptance Model (see [10]). TAMM puts the focus on trust and ease of adoption "that affect the intention to use" ([9], p.71). In the following, we define trust as a combination of positive beliefs in the technology, the provider and reliance on the system [9, 11].

1.2. Anthropomorphism and Users' Trust

If we want to understand users' trust towards smart home environments, we have to consider the mental models that users have of such environments. What is most striking when first engaging with mental models, however, is that there does not seem to be a unified terminology. Furthermore, in the field of human-computer interaction, several approaches have been applied to infer what mental models users hold, e.g., observing users while interacting with the system [16], gathering performance data [13], think-aloud protocols [14], teach-back data [15, 16] or metaphor and analogy [17]. The latter might be useful to investigate how users perceive a technology. For instance, the perception of a technology could include human-like characteristics that one "features to animals, computers and religious entities" ([18], p.1). This would hint at so-called anthropomorphic mental models. Such a description can thus be classified by where the perception is located on a bipolar scale ranging from human to technical/artificial. Research on how anthropomorphism is related to the general user experience of smart environments has only started in recent years [18].

With regard to trust, it is worthwhile to analyze the anthropomorphic characteristics of mental models and relate them to the perceived trust in a system. Kiesler et al. [19] examined how humans create a mental model of a (humanoid) robot. The authors report that people who showed anthropomorphic characteristics in their mental models of a system tended to perceive the system as more trustworthy. In the field of smart medical technologies, Pak et al. [20], for example, reported trust-building effects of anthropomorphic characteristics in a decision-making support aid for diabetes. Therefore, we expect that users perceive a system as more trustworthy if their mental model contains anthropomorphic characteristics.

1.3. Purpose of the Study and Logic of Empirical Procedure

Given the specific characteristics of smart home environments, we have to include trust as a critical factor, which might determine the intention to use smart home applications. As mentioned above, trust might be influenced by the perceived anthropomorphism of a technology. Several previous studies have underlined the importance of taking mental models into account. However, a detailed literature review revealed that little is known about mental models in smart home environments. In order to better understand mental models (in terms of anthropomorphic attributions), this article tries to answer how the anthropomorphic perception of smart home environments is related to trustworthiness.

To investigate our research question, two studies were conducted:

- 1. The aim of the first study (Study A) was to explore whether users include more or less human-like characteristics in their mental model of the smart home environment.

- 2. In the second study (Study B), we relate human-like attributions to the trustworthiness of smart home environments.

2. Study A: Exploration of Mental Models

2.1. Method

2.1.1. Material

Today, it is well known that mental models develop best through feeling and actively experiencing a technical system (see, e.g., [21] or [22]). Therefore, we invited participants into the Future Care Lab [24, 25] (Figure 1), a simulated intelligent home environment at RWTH Aachen University [23]. Participants were invited to experience and interact with a personal assistance system for heart patients, which was developed in the project "eHealth – Enhancing Mobility with Aging" [27]. The project aimed at developing a "personal assistance system, which enables elderly patients to maintain their mobility and independence despite their chronic diseases and advanced age" ([16], p.1). The evaluation scenario included interactions with two different smart homecare applications (see [16] for more details).

2.1.2. Procedure

The study was divided into four main parts. With regard to the research question addressed in this paper, we will focus our description on the interaction with the smart environment and the interview. First, participants answered a pre-questionnaire, which contained questions regarding different user characteristics. Second, each participant interacted with a home-monitoring application (see Figure 2), which was designed for patients suffering from end-stage heart failure.

Third, participants had to answer a short post-questionnaire regarding their attitude towards the experienced system. Finally, in the interview section, we explored prevalent mental models, i.e., users' perception of smart home environments, using the teach-back method. During the interview, participants were asked to describe a metaphor or analogy for the previously tested sample application. To ensure a comprehensive understanding of the reported metaphors, participants were required to "explain similarities between the scenario and the metaphor or analogy" ([17], p. 435). Because most metaphors are not very distinctive, participants could generate more than one metaphor and were asked to report which metaphor fit best. Based on the metaphor and analogy, we expected to gain better insights into how participants perceive technology [29].

The interviews (40-50 minutes) were audio-recorded and quotations were translated from German into English. The script was pretested.

2.1.3. Participants

A total of 12 participants (N1.1…1.12), six young adults between 19 and 34 years (M = 27.8, SD = 5.4) and six older adults between 48 and 71 years (M = 60.3, SD = 7.5), took part in the study. The sample was balanced with respect to gender. Most of the older adults belonged to the active part of the workforce and could be regarded as quite healthy. The group covered a broad range of professions, including teachers, economists, clerks, computer scientists and psychiatrists. The majority of participants reported extensive experience in using information and communication technologies (negative skew). At the same time, all participants reported being inexperienced in using medical technologies. Our participants, all of them German native speakers, were recruited through the authors' existing social networks.

2.1.4. Coding Scheme

As expected, users provided mental models in a variety of formats (e.g., verbal descriptions, sketches and drawings). Fifteen teach-back interviews were transcribed fully verbatim. As we were interested in perceived anthropomorphic characteristics within the language and metaphor of smart home technologies, a coding scheme was developed using a bottom-up approach. Two independent raters reached an inter-rater reliability of .87 for the coding scheme "anthropomorphism within language" and .84 for the coding scheme "anthropomorphism in metaphor".

In the following, we first describe how responses were coded with regard to the degree of anthropomorphic attributions expressed within the language (anthropomorphism within the language) and then within the metaphor (anthropomorphism in metaphor). Each rater analyzed the degree of anthropomorphic characteristics in two stages. First, the rater read the answers to get an impression of how the participant verbalized technical processes. Second, the rater coded anthropomorphic attributions along three ordinal categories:

- 1. Technical/non-anthropomorphic language/metaphor e.g., "electrodes are connected via LAN to the display" or "like a flat screen with data storage possibilities" (low degree of anthropomorphic attribution)

- 2. Technical/non-anthropomorphic, rudimentary human-like language/metaphor e.g., "the system receives my data and sends my information to a physician" or "like an airbag… which cares about me." (medium degree of anthropomorphic attribution)

- 3. Anthropomorphic, rudimentary technical language/metaphor e.g., "I am data provider" or "like a physician who checks my blood pressure at home." (high degree of anthropomorphic attribution)
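The text does not state which agreement statistic underlies the reported inter-rater reliabilities. As one common choice for two raters and categorical codes, Cohen's kappa could be computed as in the following minimal sketch. The function name `cohens_kappa` and all ratings are hypothetical illustrations, not the study's data or the authors' procedure.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who coded the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions per code.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when both raters use one identical code")
    return (observed - expected) / (1 - expected)


# Invented codes for 12 responses (1 = technical, 2 = mixed, 3 = anthropomorphic).
rater_1 = [1, 1, 1, 1, 1, 1, 2, 2, 2, 3, 3, 3]
rater_2 = [1, 1, 1, 1, 1, 2, 2, 2, 3, 3, 3, 3]
kappa = cohens_kappa(rater_1, rater_2)
print(round(kappa, 2))
```

Because the three categories are ordinal, a weighted kappa or a rank-based coefficient would be an alternative; the unweighted version is shown only because it is the simplest case.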

2.2. Results

The analysis of the transcripts showed that participants' answers differed in the anthropomorphic characteristics they used when talking about technology. Three participants attributed remarkably human-like characteristics to the technology (anthropomorphic, rudimentary technical), e.g., "the computer understands this…" (N1.12), "for the computer, I am just a provider of data" (N1.4) or "he [i.e. the pressure sensor of the scale] sent data…" (N1.5). The descriptions of three further participants were classified as technical/non-anthropomorphic, rudimentary human-like. Six participants showed technical/non-anthropomorphic characteristics.

When asked to provide a metaphor for the personal assistance system, participants generated various metaphors, which we categorized according to the degree of anthropomorphism. The most frequently extracted category was technical/non-anthropomorphic (N = 7) (e.g., "… a library, where data in the form of books and folders is stored, administered and accessed." [N1.8]). Two participants provided a technical metaphor with rudimentary human-like characteristics (e.g., "… an airbag, which is invisible, but cares about me and protects me, if an accident occurs." [N1.3]), and two participants described the assistance system with an anthropomorphic metaphor (e.g., "… a family member, who takes care of me, knows me and my habits." [N1.4]).

We expected that participants who used anthropomorphic language would generate a more anthropomorphic metaphor. The results showed that a higher degree of anthropomorphism within the language is associated with a higher level of anthropomorphism in the generated metaphor (r = .71; p < .05, Spearman rank analysis). Participants who used more human-like characteristics to describe the technology tended to generate a more human-like metaphor.
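To make the rank-based analysis concrete, the following sketch computes a Spearman coefficient as the Pearson correlation of tie-averaged ranks, which is the standard definition when ties occur (as they must with only three ordinal categories). The helper names and the two code vectors are assumptions made for illustration; they are not the ratings collected in Study A.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Invented ordinal codes (1..3) for 12 participants.
language_codes = [3, 3, 3, 2, 2, 2, 1, 1, 1, 1, 1, 1]
metaphor_codes = [3, 3, 1, 2, 2, 1, 1, 1, 1, 1, 1, 1]
rho = spearman_rho(language_codes, metaphor_codes)
print(round(rho, 2))
```

The sketch yields only the coefficient; a significance test would additionally require a reference distribution, which is omitted here.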

2.3. Interim Conclusion

The first study aimed at identifying prevalent mental models of smart home environments. By analyzing the linguistic characteristics and the metaphor, we extracted human-like attributions in the mental models. The results showed that users have different perceptions of smart home environments with regard to the degree of anthropomorphic characteristics, e.g., "he [the pressure sensor of the scale] sends data…" (N1.4) and "the computer understands this…" (N1.8). Further, we found that more human-like characteristics within the language were positively correlated with a more human-like metaphor. That is, if a participant used human-like attributions when answering, this was associated with a more human-like metaphor. Consequently, we can confirm Hänke's [30] impression that metaphors are an effective and inspiring way to overcome the abstract and difficult character of computer systems.

However, we have to qualify this observation. Although both coding schemes reached acceptable reliability scores, the correlation between anthropomorphism in language and in metaphor only occurred when using the ratings of one rater. The ratings of the second rater showed no significant correlation. Nevertheless, the results of the first study are in line with those of Kynsilehto and Olsson [18], who found that anthropomorphism might play an important role in users' perception of smart home environments.

3. Study B: Association of Anthropomorphism and Trust

In the previous study, we explored how users perceive the tested smart home environment with respect to anthropomorphic attributions. This study aims at verifying our prior investigations of how mental models appear (as perceived anthropomorphism). Furthermore, the study tries to answer how human-like attributions are associated with trustworthiness. In this context, we expect that participants who attribute more anthropomorphic characteristics to smart home environments (represented in the metaphor) have lower trust concerns.

3.1. Method

3.1.1. Procedure and Material

As in the first study, we used the same test application, components and structure (see Study A for details). In addition, we developed an extended post-questionnaire, which focused on attitudes regarding smart home environments. Participants were asked to rate bipolar statements referring to attitudes such as privacy, security and trust on continuous rating scales.

Linguistic experts checked the comprehensibility and wording of the post-questionnaire. Filling out the questionnaire required approximately 15-20 minutes, while the entire session lasted about 40 minutes in total.

3.1.2. Variables

3.1.2.1. Independent Variable: Anthropomorphism in Metaphor

As illustrated above, we were especially interested in the perception of the personal assistance system. Therefore, participants had to generate and explain a metaphor or analogy of the system, which we classified according to the degree of anthropomorphism. The attributions were analyzed according to the previously developed coding scheme (see section 2.1.4).

3.1.2.2. Dependent Variable: Trust (T)

As smart environments collect and use information about a user, trust is likely to be an important issue. We define trust as a combination of positive beliefs in the technology, the provider and reliance on the system, e.g., "I would not trust the information of the system" [9, 11]. A high trust score (max. 10 points) indicates high concerns with regard to the perceived trust.

3.1.3. Reliability and Validity of Scales

Prior to the data analysis, the reliability and validity of the questionnaire scales were verified. A few items had to be excluded since they did not meet the criteria. Almost all scale reliabilities were very high and indicated internal consistency of the scales. Specifically, Cronbach's alpha was .85 for trust. Using a principal component analysis with varimax rotation (Kaiser normalization), we analyzed convergent and discriminant validity and concluded that all items loaded on the correct latent constructs. Finally, six factors were extracted, which explained 81.9% of the variance.
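For readers unfamiliar with the reliability coefficient reported above, the following sketch shows how Cronbach's alpha is obtained from item-level scores using the usual formula alpha = k/(k-1) * (1 - sum of item variances / variance of the total score). The function name and the item scores are invented for illustration and do not reproduce the trust scale of this study.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha; item_scores holds one list of participant scores per item."""
    k = len(item_scores)
    if k < 2:
        raise ValueError("at least two items are required")
    # Total scale score per participant (sum across items).
    totals = [sum(scores) for scores in zip(*item_scores)]
    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(scores) for scores in item_scores)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Invented ratings of five participants on three hypothetical trust items.
trust_items = [
    [2.0, 4.0, 3.0, 8.0, 6.0],
    [3.0, 5.0, 2.0, 9.0, 7.0],
    [1.0, 4.0, 4.0, 7.0, 6.0],
]
alpha = cronbach_alpha(trust_items)
print(round(alpha, 2))
```

The sketch assumes complete data and items scored in the same direction; reverse-keyed items would have to be recoded first.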

3.1.4. Data Analysis

Twenty-four interviews were transcribed verbatim and analyzed using the previously developed coding scheme for metaphors (see section 2.1.4). Q-Q plots and boxplots indicated that the assumptions of parametric tests were met. Therefore, the data were analyzed using independent-samples t-tests. As anthropomorphism in the metaphor occurred in the form of a dichotomy, we used Pearson correlations as an equivalent to point-biserial coefficients (cf. [31]). The significance level for t-tests was set to p < .05.
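The equivalence mentioned above can be checked numerically: for a dichotomous predictor, the Pearson correlation equals the point-biserial coefficient r_pb = (M1 - M0) / s * sqrt(p * q), where s is the population standard deviation of all scores and p, q are the group proportions. The sketch below is an illustration under assumed data; the group labels and trust scores are invented, and the helper names are hypothetical.

```python
from math import sqrt
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    mean_x, mean_y = mean(x), mean(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def point_biserial(groups, scores):
    """Point-biserial correlation; groups holds 0/1 labels, scores the ratings."""
    ones = [s for g, s in zip(groups, scores) if g == 1]
    zeros = [s for g, s in zip(groups, scores) if g == 0]
    p = len(ones) / len(scores)
    return (mean(ones) - mean(zeros)) / pstdev(scores) * sqrt(p * (1 - p))


# Invented data: 0 = technical metaphor, 1 = anthropomorphic metaphor.
groups = [0, 0, 0, 0, 1, 1, 1, 1, 1, 0]
scores = [3.0, 4.5, 2.0, 5.0, 3.5, 6.0, 2.5, 4.0, 5.5, 1.5]

r_pearson = pearson(groups, scores)
r_pb = point_biserial(groups, scores)
# Coding the two categories as 1 and 3 instead of 0 and 1 leaves r unchanged.
recoded = [1 if g == 0 else 3 for g in groups]
r_recoded = pearson(recoded, scores)
print(round(r_pearson, 3), round(r_pb, 3), round(r_recoded, 3))
```

The last comparison illustrates why the numeric labels of the two remaining categories do not matter for the correlation, as long as the higher label denotes the same group.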

3.2. Results

3.2.1. Participants

In total, N = 24 persons (N2.1…2.24) between 19 and 76 years (M = 45.4; SD = 22.1) participated in the study. The participants came from a broad range of professions (e.g., medicine, economics and engineering) and had no prior experience in using smart environments. The sample was balanced with regard to gender and almost all participants reported being in good health. Generally, older participants had less experience with information and communication technologies than younger participants. Although the experience in using medical technologies was generally low, older participants reported more experience than younger participants.

3.2.2. Anthropomorphism

Four responses had to be classified as invalid. Due to these invalid responses, the variable became dichotomous (1=technical/non-anthropomorphic metaphor and 3=anthropomorphic metaphor), which had to be considered in the statistical analysis (see section 3.1.4). Finally, asking participants to provide a metaphor generated a bimodal distribution of responses. 45% of the mentioned metaphors were classified as technical/non-anthropomorphic metaphors.

3.2.3. Trust

The overall ratings show that participants tended to perceive the presented home-monitoring system as trustworthy (M = 30.9; SD = 25.8; Mdn = 29.0). With regard to more specific aspects of trust, the results indicate that participants trust the system even when processes are invisible (M = 3.7; SD = 2.7; Mdn = 2.5). Most participants also reported that they felt well taken care of (M = 3.7; SD = 2.7; Mdn = 2.5). In contrast, answers with regard to the personal confidence in the stability of the system did not show a clear tendency (M = 5.1; SD = 3.1; Mdn = 5.0).

3.2.4. Association of Anthropomorphism and Trust

In addition, t-tests were performed to analyze whether the degree of anthropomorphism is related to trust (anthropomorphism in the metaphor was treated as the independent variable). Our initial assumption was that people who include anthropomorphic characteristics in their mental representation judge the system as more trustworthy. In order to test this hypothesis, two-tailed t-tests and Mann-Whitney U tests were used to investigate whether trust was related to the degree of anthropomorphism. Contrary to our expectation, no significant difference could be found. Trust did not show significantly different item and scale ratings between non-anthropomorphic and anthropomorphic metaphors. Therefore, our initial hypothesis could not be supported. To double-check the result, we compared the degree of anthropomorphism with trust using Pearson correlations, which confirmed the prior results.
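The two group comparisons named above rest on simple test statistics, sketched here for two independent groups. The example assumes the pooled-variance (Student) form of the t-test, which the text does not specify, and it computes only the statistics; p-values would additionally require the t and U reference distributions, for instance from a statistics library. All scores and function names are invented for illustration.

```python
from math import sqrt
from statistics import mean, variance


def student_t(sample_a, sample_b):
    """Independent-samples t statistic with pooled variance; returns (t, df)."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    pooled = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / df
    t = (mean(sample_a) - mean(sample_b)) / sqrt(pooled * (1 / n_a + 1 / n_b))
    return t, df


def mann_whitney_u(sample_a, sample_b):
    """U statistic for sample_a: each pair with a > b counts 1, each tie 0.5."""
    return sum(
        1.0 if a > b else 0.5 if a == b else 0.0
        for a in sample_a
        for b in sample_b
    )


# Invented trust scores for the two metaphor groups.
technical = [3.0, 4.5, 2.0, 5.0, 1.5, 4.0]
anthropomorphic = [3.5, 6.0, 2.5, 4.0, 5.5]

t_value, df = student_t(technical, anthropomorphic)
u_technical = mann_whitney_u(technical, anthropomorphic)
u_anthropomorphic = mann_whitney_u(anthropomorphic, technical)
print(round(t_value, 3), df, u_technical, u_anthropomorphic)
```

The two U values always sum to the product of the group sizes, which offers a quick sanity check on the computation.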

4. General Discussion

In this section, the outcomes of both user studies are discussed with respect to the question of whether users attribute human-like characteristics to smart home environments and whether this humanization is associated with trustworthiness.

4.1. Anthropomorphic Attributions

We analyzed participants' mental models regarding a more or less anthropomorphic perception of the tested personal assistance system. On the one hand, the teach-back methodology encouraged participants to talk about technology. On the other hand, the metaphor helped to overcome the abstract and difficult character of the smart environment. Both methodologies made it possible to extract human-like characteristics, either within the language or as a characteristic of the metaphor.

In the first study, we gathered anthropomorphic attributions to technology within the language (e.g., "for the computer, I am just a provider of data" (N1.4) or "he [the pressure sensor of the scale] sends data…" (N1.5)). Here, anthropomorphic characteristics within the language were associated with anthropomorphic characteristics of the metaphor.

Focusing on metaphors, we gathered several inspiring metaphors, which might be useful for future interface designs in the context of smart home environments. Moreover, the generated metaphors might be used in instruction manuals or to support target-group-oriented trainings. Interestingly, when users were asked to generate a metaphor for the personal assistance system, some did not refer to the technology, but to the room itself, e.g., "a library, where data in the form of books and folders is stored, administered and accessed" (N1.8). This could be explained by the fact that, due to the invisible integration of the technology, participants primarily perceived the room and were not aware of the technology.

4.2. Association of Anthropomorphic Perception and Trustworthiness

In a second step, we tried to answer the question of how mental models influence attitudes such as trust. Focusing on our hypothesis with respect to the human-like perception of the personal assistance system (expressed in the metaphor), we obtained results contradicting those of Kiesler et al. [19] as well as Pak et al. [20]. The perception of anthropomorphic characteristics in the mental model (represented in the metaphor) did not increase the trustworthiness of the technology. There might be two possible explanations for the missing association between anthropomorphic perception and trustworthiness.

The first explanation refers to the appropriateness of the metaphor as a methodology. Applied to the metaphors, the coding scheme was appropriate for analyzing the degree of human likeness. However, the measurement scale ultimately reduced the participants' perception to a dichotomous scale, which might not be sensitive enough to capture the anthropomorphism that was actually perceived. By using other methods, for example Language Analysis, the Repertory Grid Technique [32] or the Semantic Differential [33], we might have developed a more differentiating scale. This might help to increase the chance of detecting any influence of anthropomorphic perception on trustworthiness. In addition, such an alternative scale would be applicable to differential diagnostic statistical testing procedures (e.g. analyses of variance). For further studies, a more precise methodology and measurement scale are recommended to help assess the anthropomorphic perception of smart home environments and to differentiate the responses in more detail.

The second explanation refers to the smart home environment as a research object. Although participants reported human-like associations in the metaphor, the system was not perceived as more trustworthy. Kiesler et al. [19] as well as Pak et al. [20] investigated systems that provoked a high degree of anthropomorphism. In our study, we required participants to generate and explain a metaphor or analogy for the personal assistance system, which is much more complex and less concrete concerning human-like characteristics and therefore allows fewer anthropomorphic associations.

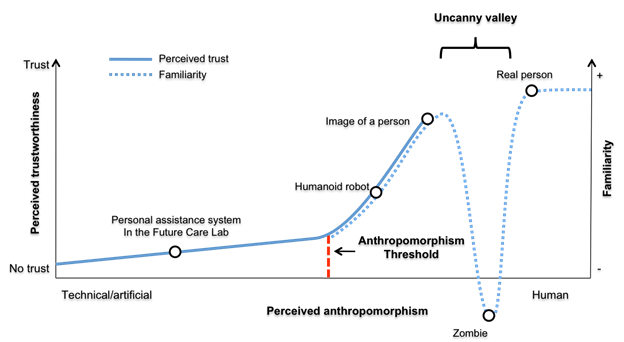

This leads us to the assumption that perceived trustworthiness will not increase remarkably until a certain threshold of human likeness is reached. As Figure 3 illustrates, we assume a non-linear relation between anthropomorphic characteristics and trustworthiness. Our personal assistance system (left side of the figure) might not have reached the critical trust-increasing threshold of human likeness. Therefore, anthropomorphic association in the metaphor was not associated with the trustworthiness that participants reported. Mori [34] described that the familiarity of a technology (e.g., a robot) might increase until a specific point of anthropomorphism is reached, which creates uncertainty as to whether the technology is human or not, termed the uncanny valley (right side of the curve). Based on our results, we suggest combining Mori's approach of an uncanny valley (assessing familiarity) with our explanation of an anthropomorphism threshold (assessing trust).

4.3. Future Research

The studies presented in this paper helped to gain first insights into anthropomorphic representations of smart home environments. However, we could not show any relation of anthropomorphism and trustworthiness. Consequently, some limitations need to be addressed in future research.

One limitation refers to the sample. In Study A in particular, we tested only 12 participants. Based on this sample, we correlated anthropomorphism in language and metaphor. To underline that both methods (teach-back and metaphor) are dependent and comprehensive, future studies should test a larger sample. Furthermore, only German citizens were tested. Other cultures might have a different view on technology (see, e.g., [35, 36, 37]). Therefore, the results provide only tendencies regarding the mental models of users and their relations to trust.

A second limitation refers to the metaphor as a method, which seems to be an oversimplification for assessing anthropomorphic perceptions. An alternative method should be applied that is more suitable for assessing nuanced individual differences in anthropomorphic perception. In particular, investigating how a participant abstracts technology in general might be promising in order to better understand how anthropomorphic characteristics are formed in the user's mind. This would lead to more comprehensive knowledge about the relationship between anthropomorphism and trust.

In addition, future studies should use an improved trust scale, since the reliability and factor analyses reduced the number of items. As a result, the provided information covered just a small excerpt of our intended constructs. Additionally, the item scoring polarity should alternate. This could increase variance, as participants might make use of the entire continuous rating scale, and could raise participants' attention.

5. Final Conclusion

The relation between anthropomorphic perception and trustworthiness remains an unanswered question. Backed up by earlier research (see, e.g., [18, 19, 20]), it seems worthwhile to investigate the relation between anthropomorphism and trust in the field of smart home environments. We suggest an anthropomorphic threshold, which should be investigated using a more precise methodology and scale. Still, metaphors are an effective and inspiring way to overcome the abstract and difficult character of computer systems.

Acknowledgements

The authors gratefully acknowledge the help of students, visitors of the PC-Café St. Anna and Maryvonne Granowski. Thanks to Richard Pak and Claudia Nick for inspiring discussions on the topic of anthropomorphism and the members of the eHealth research group, who developed the Future Care Lab.

References

[1] C. Röcker "Smart Medical Services: A Discussion of State-of-The-Art Approaches" International Journal of Machine Learning and Computing, 2(3), 2012, 226-230. View Article

[2] C. Röcker, M. Ziefle, A. Holzinger "Social Inclusion in AAL Environments: Home Automation and Convenience Services for Elderly Users" Proceedings of the International Conference on Artificial Intelligence 2011, pp. 55-59, Las Vegas, NV, USA.

[3] P. Nixon, W. Wagealla, C. English, & S. Terzis "Security, Privacy and Trust Issues, In: D. Cook & S. Das (Eds.)" Smart Environments: Technology, Protocols and Applications. 2004, pp. 220-240, London, UK: Wiley. View Article

[4] M. Ziefle, C. Röcker, & A. Holzinger "Medical Technology in Smart Homes: Exploring the User's Perspective on Privacy, Intimacy and Trust" Proceedings of the IEEE International Workshop on Security Aspects of Process and Services Engineering 2011, pp. 410-415. View Article

[5] C. Röcker, M. Ziefle, & A. Holzinger "From Computer Innovation to Human Integration: Current Trends and Challenges for Pervasive Health Technologies" In A. Holzinger, M. Ziefle, C. Röcker (Eds.). Pervasive Health - State-of-the-Art and Beyond. London, 2014, UK: Springer. View eBook

[6] C. Röcker "User-Centered Design of Intelligent Environments: Requirements for Designing Successful Ambient Assisted Living Systems" Proceedings of the Central European Conference of Information and Intelligent Systems (CECIIS'13), 2013, pp. 4-11. View Article

[7] A. Holzinger, M. Ziefle, & C. Röcker "Human-Computer Interaction and Usability Engineering for Elderly (HCI4AGING): Introduction to the Special Thematic Session" In K. Miesenberger, J. Klaus, W. Zagler, A. Karshmer (Eds.). Computers Helping People with Special Needs, Part II, LNCS 6180 2010, pp. 556 – 559, Heidelberg, Germany: Springer. View Article

[8] D.H. Shin "Ubiquitous Computing Acceptance Model: End User Concern about Security, Privacy and Risk" International Journal of Mobile Communications, 8(2), 2010, 169-186. View Article

[9] E. Kaasinen "User Acceptance of Mobile Services - Value, Ease of Use, Trust and Ease of Adoption" Doctoral thesis, Tampere University of Technology, Tampere, Finland, 2005. View Article

[10] R.D. Davis, R.R. Bagozzi, & P.R. Warshaw "User Acceptance of Computer Technology: Comparison of Two Theoretical Models" Management Science, 35(8), 1989, 982-1003. View Article

[11] E. Montague "Trust in health information technology" Proceedings of Workshop on Interactive Systems in Healthcare at Conference on Human Factors and Computing Systems, ACM CHI 2010, 121-124.

[12] M.A. Sasse "How to t(r)ap users mental models" In M. J. Tauber & D. Ackermann (Eds.), Mental Models and Human-Computer Interaction Vol. 2, 1991, pp. 59-79, North-Holland: Elsevier Science Publishers. View Article

[13] K. Manktelow & J. Jones "Principles from psychology of thinking and mental models" In M. M. Gardiner & B. Christie (Eds.), Applying Cognitive Psychology to User-Interface Design. 1987, Chichester: Wiley.

[14] M.W. Van Someren, Y.F. Barnard, & J.A.C. Sandberg "The think aloud method: A practical guide to modeling cognitive processes" 1994, London: Academic Press.

[15] G.C. Van der Veer, & M.C. Puerta Melguizo "Mental models" In J.A. Jacko & A. Sears (Eds.) The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies and Emerging Applications, 2003, pp. 52-80. New Jersey: Lawrence Erlbaum Associates.

[16] O.S. Sack, & C. Röcker "Privacy and security in technology-enhanced environments: Exploring users' knowledge about technological processes of diverse user groups" Universal Journal of Psychology, 1(2), 2013, 72-83.

[17] D.H. Jonassen, & P. Henning "Mental models: knowledge in the head and knowledge in the world" Proceedings of the 2nd international conference on Learning Sciences, Evanston, Illinois, July 25-27, 1996.

[18] M. Kynsilehto, & T. Olsson "Exploring the Use of Humanizing Attributions in User Experience Design for Smart Environments" Proceedings of the European Conference on Cognitive Ergonomics ECCE 2009, ACM Press, 123-126.

[19] S. Kiesler, A. Powers, S.R. Fussell, & C. Torrey "Anthropomorphic Interactions with a Robot and Robot-Like Agent" Social Cognition, 26(2), 2008, 169-181.

[20] R. Pak, N. Fink, M. Price, B. Bass, & L. Sturre "Decision Support Aids with Anthropomorphic Characteristics Influence Trust and Performance in Younger and Older Adults" Ergonomics, 55(9), 2012, 1059-1072.

[21] J. Johnson, & A. Henderson "Conceptual Models: Core to Good Design" 2011, San Rafael, California: Morgan & Claypool Publishers.

[22] J. Woolham, & B. Frisby "Building a Local Infrastructure that Supports the Use of Assistive Technology in the Care of People with Dementia" Research Policy and Planning, 20(1), 2002, 11-24.

[23] K. Kasugai, M. Ziefle, C. Röcker, & P. Russell "Creating Spatio-Temporal Contiguities Between Real and Virtual Rooms in an Assistive Living Environment" In J. Bonner, M. Smyth, S. O'Neill, O. Mival (Eds.). Proceedings of Create'10 - Innovative Interactions, 2010, pp. 62-67.

[24] F. Heidrich, M. Ziefle, C. Röcker, & J. Borchers "Interacting with Smart Walls: A Multi-Dimensional Analysis of Input Technologies for Augmented Environments" In Proceedings of the ACM Augmented Human Conference AH'11, 2011, CD-ROM.

[25] F. Heidrich, K. Kasugai, C. Röcker, P. Russell, & M. Ziefle "roomXT: Advanced Video Communication for Joint Dining over a Distance" In Proceedings of the 6th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth'12), IEEE Press, 2012, pp. 211-214.

[26] M. Ziefle, C. Röcker, W. Wilkowska, K. Kasugai, L. Klack, C. Möllering, & S. Beul "A Multi-Disciplinary Approach to Ambient Assisted Living" In: C. Röcker & M. Ziefle (Eds.). E-Health, Assistive Technologies and Applications for Assisted Living: Challenges and Solutions, 2011, pp. 76-93, Hershey, PA: IGI Global.

[27] M. Ziefle, C. Röcker, K. Kasugai, L. Klack, E.M. Jakobs, T. Schmitz-Rode, P. Russell, & J. Borchers "eHealth – Enhancing Mobility with Aging" In: M. Tscheligi, B. de Ruyter, J. Soldatos, A. Meschtscherjakov, C. Buiza, W. Reitberger, N. Streitz, & T. Mirlacher (Eds.): Roots for the Future of Ambient Intelligence, Adjunct Proceedings of the Third European Conference on Ambient Intelligence 2009, AmI'09, pp. 25-28.

[28] L. Klack, K. Kasugai, T. Schmitz-Rode, C. Röcker, M. Ziefle, C. Möllering, E.-M. Jakobs, P. Russell, & J. Borchers "A Personal Assistance System for Older Users with Chronic Heart Diseases" In: Proceedings of the Third Ambient Assisted Living Conference 2010, AAL'10, Berlin, Germany: VDE.

[29] J. Goschler "Metaphern für das Gehirn. Eine kognitiv-linguistische Untersuchung" 2008, Berlin: Frank und Timme [in German].

[30] S. Hänke "Anthropomorphisierende Metaphern in der Computerterminologie. Eine korpusbasierte Untersuchung" In: N. Fries & S. Kiyko (Eds.): Linguistik im Schloss. Linguistischer Workshop Wartin 2005, Czernowitz, 35-58 [in German].

[31] D.C. Howell "Statistical Methods for Psychology" 2006, Belmont, CA: Wadsworth.

[32] F. Fransella, R. Bell, & D. Bannister "A Manual for Repertory Grid Technique" 2004, Chichester: Wiley.

[33] D.R. Heise "Some Methodological Issues in Semantic Differential Research" Psychological Bulletin, 72(6), 1969, 406-422.

[34] M. Mori "Bukimi no tani [the uncanny valley]" Energy, 7, 1970, 33-35.

[35] F. Alagöz, M. Ziefle, W. Wilkowska, & A. Calero Valdez "Openness to accept medical technology – a cultural view" In A. Holzinger & K.-M. Simonic (Eds.): Human-Computer Interaction: Information Quality in eHealth 2011, pp. 151-170, Berlin, Heidelberg: Springer.

[36] C. Röcker "Information Privacy in Smart Office Environments: A Cross-Cultural Study Analyzing the Willingness of Users to Share Context Information" In D. Taniar, O. Gervasi, B. Murgante, E. Pardede, B. O. Apduhan (Eds.): Proceedings of the International Conference on Computational Science and Applications 2010, pp. 93-106, Heidelberg, Germany: Springer.

[37] C. Röcker, M. Janse, N. Portolan, & N.A. Streitz "User Requirements for Intelligent Home Environments: A Scenario-Driven Approach and Empirical Cross-Cultural Study" Proceedings of the International Conference on Smart Objects & Ambient Intelligence 2005, pp. 111-116, ACM Press.