Volume 2, Year 2014 - Pages 18-27

DOI: 10.11159/vwhci.2014.003

A New Flexible Augmented Reality Platform for Development of Maintenance and Educational Applications

Héctor Martínez¹, Seppo Laukkanen¹, Jouni Mattila²

¹SenseTrix. PL 20, 00101 Helsinki, Finland

hector.martinez@sensetrix.com; seppo.laukkanen@sensetrix.com

²Tampere University of Technology. PO Box 527, FI-33101 Tampere, Finland

jouni.mattila@tut.fi

Abstract - Augmented Reality (AR) is a technology that has attracted increasing interest in recent years. Several fields (e.g. maintenance and education) have already started to integrate AR systems into their procedures, and the preliminary results obtained from those works show a promising future for the technology. However, the number of tools that allow non-programmers to create new applications is still limited. Moreover, the great majority of these tools still have limitations in terms of flexibility and reusability. The platform presented in this paper addresses those problems and provides new means of AR application development. The main target of the platform is to serve as a unified system for aiding operators in maintenance procedures in large scientific facilities. However, an important goal is that the platform be flexible enough to be adapted to other fields. To demonstrate this flexibility, the platform has also been used to develop educational applications and a commercial educational platform.

Keywords: Augmented Reality, maintenance, scientific facilities, education.

© Copyright 2014 Authors - This is an Open Access article published under the Creative Commons Attribution License terms. Unrestricted use, distribution, and reproduction in any medium are permitted, provided the original work is properly cited.

Date Received: 2013-12-30

Date Accepted: 2014-02-09

Date Published: 2014-03-10

1. Introduction

Augmented Reality (AR) is a technology that combines images of real environments with virtual objects displayed on top of these images in real time. This combination allows the creation of interactive applications where a large amount of virtual information coexists with the real environment. Two fields that can take great advantage of the extended information and the interactivity that AR provides are maintenance and education.

Although the technology has drawn the interest of many experts from different fields, there is usually a gap between the content, whose value relies on the experts, and the application development, typically performed by developers who have no connection to the target field. A great collaborative effort is usually required to develop an AR application targeted at one specific field. In maintenance, for example, the tasks to carry out are well known by operators and other experts in the facility. However, developers from an external software company usually have no knowledge of the tasks or of the devices to maintain. Moreover, the material to be used for the augmentation (e.g. 3D models, manuals, videos, etc.) is developed and stored on the facility's computers, so the files must be converted to an appropriate format and transferred to the software developers before they can be used in the final application.

Some tools have emerged in recent years to fill this gap. These tools can be used with few programming skills (although some of them still require some knowledge) and allow experts to develop their own applications. However, there are still some drawbacks that prevent the widespread adoption of the technology. The majority of the tools lack the flexibility and the features needed in large-scale projects where hundreds or even thousands of applications need to coexist.

The work presented in this paper addresses these limitations by means of an AR platform that includes an AR engine and an authoring tool. The platform offers some novel features oriented to the creation of maintenance AR applications for large scientific facilities, while remaining flexible enough for the development of AR applications in other fields, such as education.

2. Related Work

Maintenance is one field where Augmented Reality can play an important role [1]. The possibility of guiding operators with virtual instructions displayed over the devices to maintain makes AR a suitable technology for enhancing maintenance procedures. Several works in the literature already use the technology for that purpose (e.g. [2], [3], [4]). However, the main output of those works is usually a single prototype used in one case only. What is missing is the reusable, general-purpose AR-based maintenance system that industrial environments usually require [5].

Education is another field that has already seen the benefits of using AR (e.g. [6], [7], and [8]). Students find the learning process more enjoyable and more interesting when reality is mixed with virtual elements [9], [10]. As in the maintenance field, the majority of the AR applications developed are single-use prototypes that are not usually reusable.

As stated before, there is a common problem in the development of AR applications. Programmers know how to create AR applications, but they lack content knowledge (e.g. pedagogical educational content). Specialists from the fields (e.g. maintenance operators in facilities and educators), on the other hand, know the content and how to use it, but usually have little programming experience. Some authoring tools already allow the creation of AR applications for several purposes (e.g. [11], [12], and [13]). However, these tools still require the programming of small scripts or XML descriptions. There are also some tools that allow development without programming skills (e.g. [14]). Although these tools are good enough for individual prototypes, they lack some interactivity features and in some cases are restricted to the visualization of 3D models only. Moreover, there are cases where large numbers of tracking markers and great flexibility are needed (e.g. large facilities where hundreds or thousands of devices need to be maintained and where the maintenance instructions may change over time).

The platform proposed in this paper aims to provide a flexible framework that lets its users focus on content development instead of spending time and effort on developing individual prototypes. The platform consists of a common AR engine with a large number of features and an authoring tool to easily create the desired applications. The goal is that, through the use of an intuitive interface, every user, regardless of his/her programming skills, is able to develop AR applications that make use of the AR engine. Moreover, a database and a new AR marker approach [15] have also been included in the platform to provide flexibility and to allow AR applications to coexist.

The main target of this platform is its use in the maintenance field, as the development has been carried out within the PURESAFE project [16]. The goal is to aid maintenance operators in large scientific facilities such as CERN or GSI in their maintenance procedures. The AR applications for maintenance guide operators through their tasks (performed either by human intervention or by remote handling), saving time and costs as well as providing safer working methods. Additionally, thanks to the main researcher's previous experience in AR for educational purposes [17], the platform has also been adapted to create an educational platform which is already commercially available [18].

The remainder of the text is organised as follows: Section 3 details the proposed platform, including the developed engine and authoring tool as well as the AR applications. Section 4 presents some results in the form of applied examples. Finally, Section 5 discusses the platform and outlines future work.

3. Materials and Methods

In this section a general overview of the platform is presented. The platform comprises an engine and an authoring tool. The engine is in charge of running the final AR applications that have been created using the authoring tool. The architecture of the engine, the authoring tool and the description of the AR applications are detailed in the following subsections.

3.1. Engine Architecture

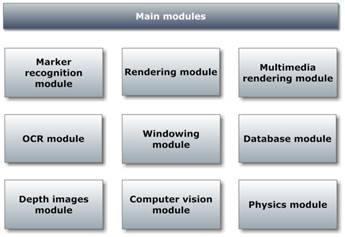

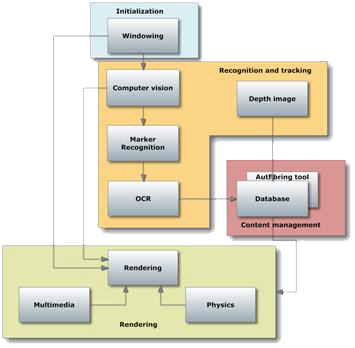

The engine has been developed entirely in C++ and integrates a large number of libraries. Two of the most important are ARToolKit [19], a well-known library for AR marker tracking, and OpenSceneGraph [20], a powerful 3D graphics toolkit. These two libraries deal with the two common challenges in any AR application: the understanding of the environment and the rendering of virtual content. Because each additional feature has brought further libraries into the engine, the engine has been built in a modular fashion so that new functionalities can be integrated easily. Figure 1 shows the main modules of the engine.

The engine has been designed as a component-based architecture where the components (i.e. the modules) offer several interfaces (e.g. session configuration, tracking information, etc.) in order to allow the communication between them. This approach allows flexibility as components can be reused and/or substituted by new ones if needed (e.g. when a newer and more robust Marker recognition module has been developed or if there is a need to change the Rendering module for a more powerful one). The modules have been integrated into higher level components that correspond to the phases explained later and shown in Figure 2.

The engine supports the creation of new applications through a plug-in system (integrated in the Windowing module) where every new application is considered a new plug-in. The engine contains an abstract class that plug-ins need to implement. The methods declared by the abstract class allow setting up the application (i.e. loading the tracking system and the required virtual elements), updating the values of variables according to the AR tracking and the user input, and displaying the resulting AR scene. The engine also exposes the methods provided by the modules presented in Figure 1, so every application can use them when needed. This design provides great flexibility and allows the creation of independent applications that can coexist in the same working environment.
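The plug-in contract described above can be illustrated with a minimal sketch. It is written in Python for brevity (the engine itself is C++), and every name in it (`ARPlugin`, `setup`, `update`, `display`, `Engine`, `BatteryDemo`) is a hypothetical stand-in rather than the engine's actual interface.

```python
from abc import ABC, abstractmethod


class ARPlugin(ABC):
    """Hypothetical counterpart of the abstract class that plug-ins implement."""

    @abstractmethod
    def setup(self):
        """Load the tracking system and the required virtual elements."""

    @abstractmethod
    def update(self, tracking, user_input):
        """Update internal variables from the AR tracking and the user input."""

    @abstractmethod
    def display(self):
        """Return a description of the resulting AR scene."""


class BatteryDemo(ARPlugin):
    """Toy plug-in: shows one instruction while a marker is visible."""

    def setup(self):
        self.instruction = "Remove the battery cover"
        self.visible = False

    def update(self, tracking, user_input):
        self.visible = tracking.get("marker_visible", False)

    def display(self):
        return self.instruction if self.visible else "(no marker in view)"


class Engine:
    """Hosts independent plug-ins side by side under one shared engine."""

    def __init__(self):
        self.plugins = {}

    def register(self, name, plugin):
        plugin.setup()
        self.plugins[name] = plugin

    def run_frame(self, name, tracking, user_input):
        plugin = self.plugins[name]
        plugin.update(tracking, user_input)
        return plugin.display()
```

Because the engine in this sketch only ever calls the three abstract methods, a new application can be added by registering another subclass, without touching the engine code.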

The plug-in system has been designed following a database-centric architecture, where the use of databases and the aforementioned Database module allows the content and behaviour of the application to be defined and edited. Moreover, if two AR applications require the same global behaviour (e.g. the same tracking system, the same interaction capabilities, etc.), they can be developed as a single plug-in that makes use of two different databases where the application-specific information is stored.
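As an illustration of one plug-in serving two applications through two databases, the following sketch uses Python's built-in `sqlite3` module. The paper does not name the database technology or its schema, so the `content` table, its columns, the file names and the `GuidedPlugin` class are all assumptions made for the example.

```python
import sqlite3


def make_database(rows):
    """Create an in-memory content database (hypothetical schema)."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE content (marker_id INTEGER, kind TEXT, source TEXT)")
    db.executemany("INSERT INTO content VALUES (?, ?, ?)", rows)
    db.commit()
    return db


class GuidedPlugin:
    """A single plug-in whose application-specific content comes from a database."""

    def __init__(self, db):
        self.db = db

    def content_for(self, marker_id):
        """Return the (kind, source) pairs stored for a detected marker."""
        cursor = self.db.execute(
            "SELECT kind, source FROM content WHERE marker_id = ? ORDER BY rowid",
            (marker_id,),
        )
        return cursor.fetchall()


# Two applications share the plug-in code and differ only in their database.
phone_db = make_database([
    (1, "text", "Remove the back cover"),
    (1, "model", "arrow.osg"),
])
planets_db = make_database([(1, "model", "earth.osg")])

phone_app = GuidedPlugin(phone_db)
planets_app = GuidedPlugin(planets_db)
```

In this arrangement, changing an instruction or swapping a 3D model is a database edit and requires no change to the plug-in itself.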

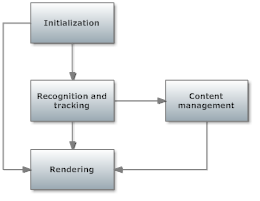

The engine has four main phases which correspond to the high level components mentioned earlier: Initialization, Recognition and tracking, Content management and Rendering. The relationship between the four phases and the engine modules can be seen in Figure 2.

During the Initialization phase, the windowing module is in charge of displaying the options to the user in order to allow the selection of the configuration for the current session of the AR application.

Once the user has selected the right configuration, the Recognition and tracking phase uses this information to set up the video feed and start the recognition and tracking. The engine accepts different video inputs, such as live video from a camera, pre-recorded video, images or depth video. Regardless of the video input, the engine uses the images to detect the markers visible in them. The engine also supports a multimarker setup, which makes tracking more robust when one of the markers may be hidden (e.g. by the maintenance procedure itself) or when lighting conditions make proper tracking of the markers more difficult.
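The benefit of a multimarker setup can be seen in a deliberately simplified sketch. It assumes that each marker's offset from a common origin is known in advance and it handles translation only, whereas a real multimarker tracker also handles orientation. The function name and the data layout are hypothetical, and Python is used only for brevity.

```python
def estimate_origin(visible, layout):
    """Estimate the position of the common origin from the visible markers.

    visible: {marker_id: (x, y, z)} positions observed in the current frame.
    layout:  {marker_id: (dx, dy, dz)} known offset of each marker from the origin.
    Returns the averaged origin position, or None if no known marker is visible.
    """
    estimates = [
        tuple(p - o for p, o in zip(position, layout[marker_id]))
        for marker_id, position in visible.items()
        if marker_id in layout
    ]
    if not estimates:
        return None
    count = len(estimates)
    # Average the per-marker estimates axis by axis.
    return tuple(sum(axis) / count for axis in zip(*estimates))
```

As long as at least one marker of the set remains visible, the origin (and therefore the augmentation attached to it) can still be placed, which is what makes the setup tolerant to occlusion.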

The engine is able to detect two different kinds of markers. The first is a 2D barcode marker, which is a traditional approach in AR applications. The second is a novel marker that has been designed for the proposed platform [15]. This novel approach consists of a hybrid marker that uses a 2D barcode marker together with a text coding system. The marker is first detected using traditional marker detection algorithms. Using the position and orientation of the marker, the text is then segmented from the image and read using Optical Character Recognition (OCR) techniques. Finally, the output is the position and orientation information combined with a unique id that encodes, together with the database system, the information necessary for the augmentation. The goal of this second type is to overcome the limit on the maximum number of unique 2D barcode markers, giving the engine more flexibility, as a higher number of unique markers can be used within the same application. This is especially important in the maintenance field, as a large facility may need a high number of unique markers in order to uniquely identify all the devices that need to be maintained using an AR application.
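A rough sketch of how a barcode id and an OCR-read text could be combined into one database key is given below, in Python for brevity. The actual coding scheme is described in [15] and is not reproduced here: the key format, the text normalisation and all names are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridDetection:
    barcode_id: int  # id decoded from the 2D barcode part of the marker
    pose: tuple      # position and orientation, treated as opaque here
    text: str        # characters read by OCR from the text part


def unique_id(detection):
    """Combine barcode id and text into one key (hypothetical format)."""
    return f"{detection.barcode_id}-{detection.text.strip().upper()}"


def lookup(detection, database):
    """Fetch the augmentation information for a detection, or None."""
    return database.get(unique_id(detection))


def capacity(barcode_count, alphabet_size, text_length):
    """Number of distinct hybrid markers for a given text code size."""
    return barcode_count * alphabet_size ** text_length
```

Under these assumptions, each additional text character multiplies the number of distinguishable markers by the alphabet size, which is how the text code extends the limited set of unique barcodes.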

When any of the aforementioned markers has been detected, the engine looks for the appropriate content to augment the scene in the Content management phase. The engine contains a database module where all the information is stored. The databases can be created and edited using the authoring tool developed for this purpose. The use of the database module provides great flexibility, as the virtual content information can be edited outside the source code of the engine (i.e. there is no need to change the code of the engine to adapt it to new applications). Moreover, the virtual content that will be used for the augmentation can be stored locally or in a network storage system.

Finally, during the Rendering phase, the images obtained for the tracking are augmented by the rendering module using the information provided by the database module.
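The four phases can be summarised as a simple per-frame pipeline. The sketch below is in Python for brevity and replaces the real video, tracking and rendering work with stand-ins: frames are plain dictionaries that already list the marker ids they contain, and all function names and data shapes are hypothetical.

```python
def initialize(selection):
    """Initialization: turn the user's choices into a session configuration."""
    return {"app": selection["app"], "input": selection.get("input", "camera")}


def recognise_and_track(frame):
    """Recognition and tracking: stand-in that reads marker ids from a fake frame."""
    return [{"id": marker_id, "pose": (0.0, 0.0, 0.0)} for marker_id in frame["markers"]]


def manage_content(detections, database):
    """Content management: look up the virtual content for each known marker."""
    return [(d["pose"], database[d["id"]]) for d in detections if d["id"] in database]


def render(frame, content):
    """Rendering: attach the selected content to the frame it was tracked in."""
    return {"image": frame["image"], "overlays": [element for _pose, element in content]}


def run_session(selection, frames, databases):
    """Run the four phases in order over a sequence of frames."""
    session = initialize(selection)
    database = databases[session["app"]]
    output = []
    for frame in frames:
        detections = recognise_and_track(frame)
        content = manage_content(detections, database)
        output.append(render(frame, content))
    return output
```

Markers that have no entry in the selected database are simply ignored in this sketch, so a frame without known markers is passed through without overlays.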

3.2. Authoring Tool

In order to allow the easy creation of AR applications that make use of the engine, an authoring tool has been developed. The authoring tool has been implemented using Qt [21].

The authoring tool allows the creation of individual AR applications and a set of maintenance tasks:

- Individual AR applications: These applications are not related to other applications and they can be run independently.

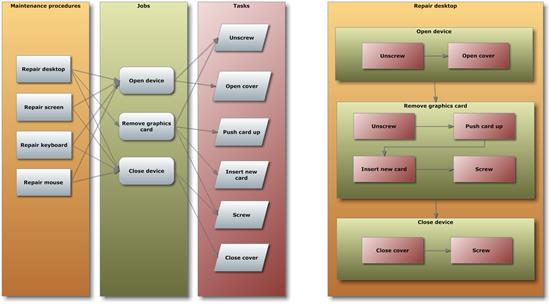

- Set of maintenance tasks: This is a special feature designed for the maintenance field. As maintenance procedures usually comprise several tasks, there is a need to combine all these tasks into the same application. To meet this requirement, the authoring tool allows the definition of three elements: maintenance, job and task.

- Maintenance: Every maintenance element is an individual maintenance procedure and contains one or more jobs.

- Job: Every maintenance procedure can contain one or more different jobs that need to be carried out. Every job contains one or more tasks.

- Task: A task contains all the steps and instructions that need to be performed in order to complete the maintenance. Every task can be considered as an independent AR application. However, the different tasks are connected through one or more jobs. Every task is made up of several virtual elements that are displayed when the maintenance worker is using the AR application.

Figure 3 shows an example of the interconnections between the different options inside the set of maintenance tasks. The example depicts a simplified computer repair company. The list of maintenance procedures contains all the procedures that the company performs (repair desktop, repair screen, repair keyboard and repair mouse). Every maintenance procedure contains one or more jobs that can be common to several procedures (e.g. Open device) or specific to one maintenance procedure (e.g. Remove graphics card). Finally, for every job, there is a set of tasks that can be shared by several jobs (e.g. Unscrew) or specific to the current job (e.g. Insert new card). The right side of Figure 3 shows the final flow of one maintenance procedure, containing a set of jobs that, in turn, encompass a set of tasks.
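The maintenance-job-task hierarchy maps naturally onto a small data model, sketched here in Python for brevity. The class and field names are hypothetical, and although the job and task names are taken from the example above, the assignment of tasks to jobs is assumed for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    virtual_elements: list = field(default_factory=list)  # e.g. models, images, texts


@dataclass
class Job:
    name: str
    tasks: list


@dataclass
class Maintenance:
    name: str
    jobs: list

    def flow(self):
        """Flatten the procedure into its ordered (job, task) steps."""
        return [(job.name, task.name) for job in self.jobs for task in job.tasks]


# A task object can be shared by several jobs, and a job by several procedures.
unscrew = Task("Unscrew")
open_device = Job("Open device", [unscrew])
remove_card = Job("Remove graphics card", [unscrew, Task("Insert new card")])

repair_desktop = Maintenance("Repair desktop", [open_device, remove_card])
repair_screen = Maintenance("Repair screen", [open_device])
```

Since shared jobs and tasks are the same objects, editing the "Unscrew" task once updates every procedure that uses it.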

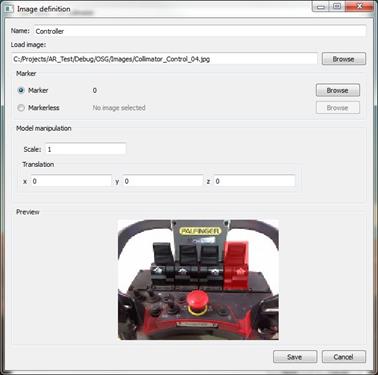

As stated before, the authoring tool has been designed to be intuitive and easy to use. The application contains several windows for the definition of the different virtual elements, as well as for the definition of maintenance procedures, jobs and tasks. Figure 4 shows an example of an image definition. The user can select the image to display in the AR scene, edit some properties and see a preview of the selected file. In the future, the tool will be enhanced to include more properties and features.

3.3. AR Applications

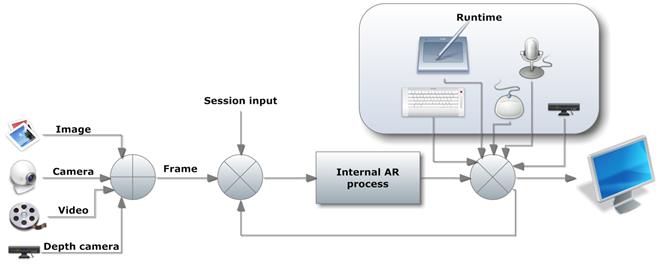

This section describes the interaction between the user and the AR application. Figure 5 shows a schematic view of the interaction flow in the application.

The application starts with a Graphical User Interface (GUI) window where the user selects the session configuration. The user first selects the application to run, which can be either an AR-guided maintenance procedure or a normal AR application. The list of available applications is stored internally in the database system and displayed in the GUI, allowing the user to select the appropriate one. In the GUI, the user is also able to select the image input, which tells the system where the frames for the AR tracking and for the final display come from. As mentioned before, there are four possible choices:

- Camera: This is the most common option, as the AR applications are used in real time. The camera can be either a built-in camera, a webcam, a camera attached using the USB port or an IP camera connected to the network.

- Video: In some cases a pre-recorded video may be needed instead of a live video feed (e.g. when the maintenance instructions need to be redesigned or for supervision purposes).

- Image: There might also be some cases where the use of static images is needed (this is useful for the creation of the maintenance instructions).

- Depth camera: It is also possible to select a depth camera (e.g. Kinect camera) for the input. If this option is selected, the RGB (Red, Green and Blue, i.e. traditional colour images) and the depth images from the camera are used as input of the AR process. This feature is currently experimental only.

All the information provided in the GUI window is fed to the system, where the internal processes explained in the engine description take place. The output of these processes is combined with the runtime user input both to produce the final output and to feed back into the internal processes.
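The session configuration gathered in the GUI can be pictured as a small record. The sketch below is in Python for brevity, and its names (`ImageInput`, `SessionConfig`, `streams`, `is_live`) as well as the example source string are hypothetical and do not come from the engine.

```python
from dataclasses import dataclass
from enum import Enum


class ImageInput(Enum):
    CAMERA = "camera"
    VIDEO = "video"
    IMAGE = "image"
    DEPTH_CAMERA = "depth camera"


@dataclass(frozen=True)
class SessionConfig:
    application: str          # name of the application selected in the GUI
    guided_maintenance: bool  # AR-guided maintenance procedure or normal AR application
    image_input: ImageInput
    source: str               # device name, file path or network address

    def streams(self):
        """Image streams delivered to the AR process for this input."""
        if self.image_input is ImageInput.DEPTH_CAMERA:
            return ("rgb", "depth")
        return ("rgb",)

    def is_live(self):
        """True when the frames come from a device in real time."""
        return self.image_input in (ImageInput.CAMERA, ImageInput.DEPTH_CAMERA)
```

For instance, `SessionConfig("Collimator exchange", True, ImageInput.VIDEO, "mockup.avi")` would describe a guided procedure replayed from a recording, which is the kind of session suggested above for redesigning instructions.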

The user can interact with the application in several ways. Depending on the hardware, the user can interact with keyboard and mouse (e.g. on a traditional desktop or laptop), with a touch screen (e.g. on a tablet, mobile phone or other touch device) or with voice commands (this feature is planned, through a speech recognition system, but not yet implemented). If the depth camera has been selected previously, the input from the depth image can also be used at this stage (e.g. for gesture recognition).

The final display is a multimodal augmented scene where the real scene is combined with virtual elements (3D models, images, videos, texts, webpages and voice commands). The final display has been designed to be user friendly and to provide as much information as needed without distracting the user from the actual maintenance procedure. For this reason, two HUD (Head-Up Display) menus have been implemented in the application. Both menus can be shown or hidden as requested by the user. The first HUD menu is displayed in the bottom part of the image. It has been designed to display the instructions and any additional information that cannot be displayed as AR content, for example because the information has no relationship with a physical object or because it refers to objects that are not visible in the scene. The second HUD menu, called the help menu, overlays the whole screen. It is intended for application configuration (e.g. selecting the AR threshold or switching filtering on and off) and is therefore only used to adjust the application. This menu is, however, semi-transparent, so the AR scene remains visible in the background. A typical screenshot of the application is shown in Figure 6.

As explained in this section, there are several means of Human Computer Interaction (HCI) while using the application. Apart from those mentioned before, it is also important to point out the inherently interactive character of AR applications. The use of markers allows a high level of interaction, as users can directly manipulate the virtual objects that are displayed on top of them (e.g. moving them freely in 3D space or pausing and playing videos). This is especially important in the education field, as it allows students to interact with the learning content and thus to learn by doing [22].

4. Results

Several applications have already been developed using the proposed framework. Some maintenance applications have been implemented for demonstration purposes. Figure 7 shows two examples of AR applications for maintenance. The upper image in Figure 7 shows guiding instructions for removing the battery from a mobile phone. The application explains, step by step, the procedure required to disassemble a mobile phone in order to exchange the SIM card. This application was one of the first fully functional applications created with the platform, and it was developed for demo purposes as it can be easily carried to the facilities where the use of AR maintenance has to be demonstrated. Although exchanging a SIM card is usually a simple matter, the application shows the potential of AR guidance for tasks that require several steps, such as maintenance procedures.

The bottom image (Figure 7) illustrates an example of AR remote maintenance where guiding instructions for a collimator exchange at CERN are provided to the operator. The application guides maintenance operators through the remote exchange of a collimator inside the Large Hadron Collider (LHC) at CERN by means of keypoint guiding. The operator controls a crane to remove the old collimator and to install the new one. During the process, several helping elements are augmented over the real scene or displayed in a HUD as needed. The application was successfully tested in a mock-up at CERN.

The framework has also been used for educational purposes. Figure 8 shows two examples of AR for education. The upper image (Figure 8) shows an example of a planetary educational application. The distances between markers were used to teach the distances between planets and their rotational behaviour. The application also shows the two motions of the Earth (i.e. rotation about its own axis and revolution around the Sun), and several textures can be selected in order to provide different views of the planet, such as the level of water or the view of the Earth at night.

In the bottom image (Figure 8), an example of a mathematical AR application is shown. The application consists of several games that test mathematics skills (e.g. asking for the results of operations) and of mathematics-related memory games (e.g. remembering the prime numbers). There are ten big physical markers on the floor corresponding to the numbers from 0 to 9. The goal is to hide the numbers included in the answer to the question posed by the application. This application was designed to be used on a large screen with a projector for the Mathematics day at Heureka, the Finnish Science Centre.
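The answer check in such a game can be sketched as a comparison between the markers that have disappeared from view and the digits of the expected answer. The sketch below is in Python for brevity and is only one plausible reading of the game rules: the function names are hypothetical, and it assumes that every marker outside the answer must stay visible.

```python
ALL_MARKERS = set(range(10))  # the ten floor markers, one per digit


def required_hidden(answer):
    """Digits that must be covered for a given numeric answer."""
    return {int(ch) for ch in str(abs(answer))}


def is_correct(visible, answer):
    """True if exactly the digits of the answer are hidden."""
    hidden = ALL_MARKERS - set(visible)
    return hidden == required_hidden(answer)
```

For the question "6 x 7", the players would need to cover markers 4 and 2 and leave the other eight visible. Note that under this reading an answer with a repeated digit, such as 11, requires only one marker to be covered.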

As can be seen from the previous examples, the platform has already been used with positive results in the maintenance and education fields. Moreover, the framework has been used to develop a commercial application that is already available, called STEDUS [18]. STEDUS is an educational platform that provides educational institutions with access to AR applications. The AR applications run on the developed engine, and they have been created using the proposed authoring tool.

5. Discussion

This paper presents a new platform for AR application development. The platform consists of an engine in charge of running the applications and an authoring tool for developing them. The platform has been built to integrate the majority of common AR features (e.g. displaying virtual elements or tracking markers) and includes some novel features: a new hybrid AR marker approach combined with a database system to uniquely identify a large number of devices to maintain in the same facility (e.g. large scientific facilities), several means of interactivity combined in the same tool (e.g. multimodal augmentation and a maintenance-specific step guiding system) and a maintenance-job-task-oriented structure for maintenance purposes.

The platform tries to overcome some drawbacks of current AR systems for maintenance and education, such as their limited flexibility and their poor suitability for large-scale projects. The platform has already demonstrated its flexibility, as it has been applied not only to the main target (i.e. maintenance in scientific facilities), but also to the educational field. The platform can be integrated into large-scale projects (e.g. large scientific facilities, such as CERN or GSI) by making use of the database system and the new AR marker approach that have been integrated in the system. Moreover, the platform has been built following a plug-in approach that enables multiple AR applications to coexist in the same environment while sharing a common engine.

Another important advantage of the platform is that it allows non-programmers to develop AR applications by means of the implemented authoring tool. The tool can be used for general-purpose application development, but it is especially useful in the maintenance field, as a building feature based on the maintenance-job-task structure has been designed for this purpose. As a consequence, the authoring tool can be delivered to maintenance facilities (or to any other organization, such as educational institutions) so that experts (e.g. maintenance experts, educators, etc.) can develop AR applications based on their own needs and requirements. Furthermore, the applications can be updated (e.g. when new 3D models need to replace old ones, or when some steps of a maintenance procedure have been removed or replaced) using the authoring tool without changing the code, which enables a long life cycle of the application without the intervention of an external software company. The need to deal with technical issues related to AR technology is therefore eliminated, and experts can concentrate their efforts on applying their knowledge (e.g. maintenance procedures, pedagogical learning, etc.) to the content of the application.

The platform has been successfully used for the development of several applications, both in desk-size demos and in real on-site applications (e.g. at CERN and Heureka). Moreover, a commercial solution is making use of the proposed platform for the creation and execution of AR applications for educational purposes. In the future, it is foreseen that new fields (e.g. marketing, tourism, etc.) could benefit from the use of the platform thanks to its flexibility.

The next step for the platform is to develop the authoring tool further in order to include new features that enhance maintenance, especially in those cases where the maintenance has to be done remotely. Some examples that are currently being studied are the guiding of robotic arms and mobile robots. The purpose is to analyse the benefits that AR applications could bring to such systems and to gather the requirements that may be needed. Once this step has been carefully evaluated, its output will be used to implement the new features in the authoring tool.

Also, the features that are experimental at this stage (i.e. depth camera and voice commands) will be studied further in order to integrate them in the most suitable way. The information from the depth camera can be useful to detect gestures of the operator (currently being tested), but also to obtain 3D spatial information about the devices and the environment. The voice command feature (i.e. a text-to-speech feature which produces voice commands from a predefined text), which is already working in the engine, will be integrated into the authoring tool to enable its users to define the commands to be augmented in the final application. Moreover, there is also a plan to integrate a speech recognition system (i.e. a system that recognizes the user's voice) in the engine in order to allow bidirectional voice commands.

Acknowledgements

This work was carried out in the project "Preventing hUman intervention for incREased SAfety in inFrastructures Emitting ionizing radiation (PURESAFE)", funded by the 7th Framework Programme of the European Union under the Marie Curie Actions - Initial Training Networks (ITNs).

References

[1] M. Hincapie, A. Caponio, H. Rios, and E. Gonzalez Mendivil, "An introduction to Augmented Reality with applications in aeronautical maintenance," in Transparent Optical Networks (ICTON), 13th International Conference on, pp. 1–4, 2011.

[2] S. Benbelkacem, N. Zenati-Henda, M. Belhocine, A. Bellarbi, M. Tadjine, and S. Malek, "Augmented Reality Platform for Solar Systems Maintenance Assistance," in Proceedings of the International Symposium on Environment Friendly Energies in Electrical Applications (EFEEA), 2010.

[3] F. De Crescenzio, M. Fantini, F. Persiani, L. Di Stefano, P. Azzari, and S. Salti, "Augmented reality for aircraft maintenance training and operations support," Computer Graphics and Applications, IEEE, vol. 31, no. 1, pp. 96–101, 2011.

[4] S. Henderson and S. Feiner, "Exploring the benefits of augmented reality documentation for maintenance and repair," Visualization and Computer Graphics, IEEE Transactions on, no. 99, p. 1–1, 2010.

[5] F. Doil, W. Schreiber, T. Alt, and C. Patron, "Augmented Reality for Manufacturing Planning," in Proceedings of the Workshop on Virtual Environments, pp. 71–76, 2003.

[6] A. Balog, C. Pribeanu, and D. Iordache, "Augmented Reality in Schools: Preliminary Evaluation Results from a Summer School," International Journal of Social Sciences, vol. 2, no. 3, pp. 163–166, 2007.

[7] C.H. Chen, C.C. Su, P.Y. Lee, and F.G. Wu, "Augmented Interface for Children Chinese Learning," in 7th IEEE International Conference on Advanced Learning Technologies, pp. 268–270, 2007.

[8] H. Kaufmann and A. Dünser, "Summary of Usability Evaluations of an Educational Augmented Reality Application," Virtual Reality, pp. 660–669, 2007.

[9] V. Lamanauskas, C. Pribeanu, R. Vilkonis, A. Balog, D. Iordache, and A. Klangauskas, "Evaluating the Educational Value and Usability of an Augmented Reality Platform for School Environments: Some Preliminary Results," Proceedings of 4th WSEAS/IASME International Conference on Engineering Education (Agios Nikolaos, Crete Island, Greece, 24-26 July, 2007). Mathematics and Computers in Science and Engineering, World Scientific and Engineering Academy and Society Press, pp. 86–91, 2007.

[10] C. Juan, F. Beatrice, and J. Cano, "An augmented reality system for learning the interior of the human body," Eighth IEEE International Conference on Advanced Learning Technologies, pp. 186–188, 2008.

[11] H. Seichter, J. Looser, and M. Billinghurst, "ComposAR: An intuitive tool for authoring AR applications," Mixed and Augmented Reality. ISMAR 2008. 7th IEEE/ACM International Symposium on, pp. 177–178, 2008.

[12] B. MacIntyre, M. Gandy, S. Dow, and J.D. Bolter, "DART: a toolkit for rapid design exploration of augmented reality experiences," Proceedings of the 17th annual ACM symposium on User interface software and technology, pp. 197–206, 2004.

[13] F. Ledermann and D. Schmalstieg, "APRIL: a high-level framework for creating augmented reality presentations," 2005.

[14] M. Haringer and H.T. Regenbrecht, "A pragmatic approach to augmented reality authoring," Mixed and Augmented Reality, 2002. ISMAR 2002. Proceedings. International Symposium on, pp. 237–245, 2002.

[15] H. Martínez, S. Laukkanen, and J. Mattila, "A New Hybrid Approach for Augmented Reality Maintenance in Scientific Facilities," International Journal of Advanced Robotic Systems, Manuel Ferre, Jouni Mattila, Bruno Siciliano, Pierre Bonnal (Ed.), ISSN: 1729-8806, InTech.

[16] PURESAFE project website.

[17] H. Martínez, R. del-Hoyo, L.M. Sanagustín, I. Hupont, D. Abadía, and C. Sagüés, "Augmented Reality Based Intelligent Interactive E-Learning Platform," Proceedings of 3rd International Conference on Agents and Artificial Intelligence (ICAART), vol. 1, pp. 343–348, 2011. ISBN: 978-989-8425-40-9.

[18] H. Martínez and S. Laukkanen, "STEDUS, a new educational platform for Augmented Reality applications," 4th Global Conference on Experiential Learning in Virtual Worlds, 2014.

[19] H. Kato and M. Billinghurst, "Marker tracking and HMD calibration for a video-based augmented reality conferencing system," in Proceedings. 2nd IEEE and ACM International Workshop on Augmented Reality (IWAR), pp. 85–94, 1999.

[20] OpenSceneGraph website.

[21] Qt website.

[22] G. Gibbs, Learning by doing: A guide to teaching and learning methods. Further Education Unit, 1988.